How do we create infrastructure for corporate clients?

An interview with Łukasz Tomaszkiewicz, Senior Cloud Infrastructure Architect, on a pragmatic approach to creating infrastructure for corporate clients.

ŁC: Hi! We’re meeting today to discuss the benefits of a pragmatic approach to setting up infrastructure and leveraging cloud computing capabilities for enterprise clients, such as banks.

ŁT: Hello. That’s right. I’ll be talking about improving information security, automating software development processes and speeding up our corporate client’s applications.

Let me say at the outset that our partnership with the client began about four years ago with an infrastructure audit. It showed that a lot needed improving, and some things had to be built from scratch.

How did you improve information security?

We split the environments into separate AWS accounts. This structure keeps individual accounts isolated from each other, which increases security. The client also gains greater cost transparency: expenditure can be broken down by application and environment. As a result, we simplified infrastructure management.

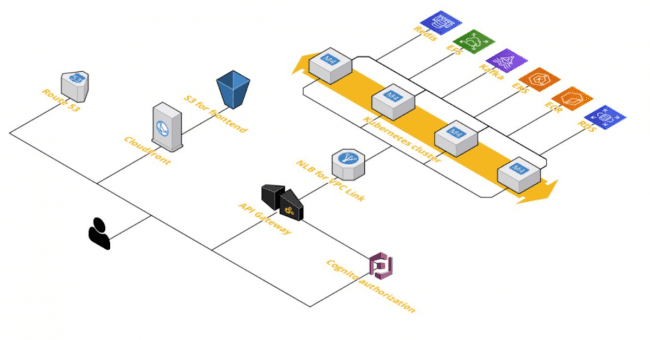
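To make the cost-transparency point concrete: when every application and environment has its own account, a bill can be broken down simply by account ID. The sketch below assumes billing line items have already been exported as (account ID, amount) pairs, for example from AWS Cost Explorer, which can group costs by linked account; the account IDs and application names are hypothetical.

```python
from collections import defaultdict

# Hypothetical mapping of AWS account IDs to (application, environment).
# With one account per application environment, the account ID alone
# identifies who incurred a cost; no per-resource tagging is required.
ACCOUNTS = {
    "111111111111": ("payments", "prod"),
    "222222222222": ("payments", "test"),
    "333333333333": ("crm", "prod"),
}

def costs_by_app_and_env(line_items):
    """Sum (account_id, amount_usd) line items per (application, environment)."""
    totals = defaultdict(float)
    for account_id, amount in line_items:
        # Accounts missing from the mapping are grouped so they stand out.
        key = ACCOUNTS.get(account_id, ("unassigned", "unknown"))
        totals[key] += amount
    return dict(totals)
```

Accounts that are not in the mapping end up under `("unassigned", "unknown")`, which makes untracked spending easy to spot.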

We removed Elastic Beanstalk because it was not a good fit for the applications the customer was running and caused many problems, which slowed down the delivery of new features. In its place, we first deployed infrastructure based on Amazon ECS; for the more advanced cases that emerged in later stages of the project, we built clusters on Kubernetes.

What did you automate?

We wanted to automate the entire application deployment procedure. As a result, code is processed automatically from the moment a developer pushes it to the repository until the new version goes live in production. This matters for the security of both the system and the business: the knowledge of how to release a new version of the application is not scattered among individual developers but captured in code that carries out the process automatically.
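The core idea, that release knowledge lives in code rather than in people's heads, can be illustrated with a tiny pipeline runner. This is a hypothetical sketch, not the client's actual pipeline: the stage names and functions are placeholders for real build, test and deployment steps.

```python
from collections.abc import Callable

# Hypothetical stage functions: each receives a shared context dict and
# returns True on success. Real stages would call build/test/deploy tools.
def build(ctx: dict) -> bool:
    ctx["artifact"] = f"app-{ctx['commit']}.tar.gz"
    return True

def run_tests(ctx: dict) -> bool:
    return ctx.get("tests_pass", True)

def deploy(ctx: dict) -> bool:
    ctx["deployed"] = ctx["artifact"]
    return True

STAGES: list[tuple[str, Callable[[dict], bool]]] = [
    ("build", build),
    ("test", run_tests),
    ("deploy", deploy),
]

def run_pipeline(stages, ctx: dict) -> list[str]:
    """Run stages in order and stop at the first failure.
    Returns the names of the stages that completed successfully."""
    completed = []
    for name, stage in stages:
        if not stage(ctx):
            ctx["failed_stage"] = name  # record where the release stopped
            break
        completed.append(name)
    return completed
```

Because the order of stages and the stop-on-failure rule are written down, every release follows the same path regardless of who triggered it.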

How did you speed up the applications?

At the architecture level, we put Amazon CloudFront in front of all the applications. This added an extra security layer, because CloudFront helps protect against distributed denial-of-service (DDoS) attacks. At the same time, as a content delivery network (CDN), it keeps copies of infrequently changing objects at locations close to end users. This makes the applications load much faster, which is important for the applications the customer uses.
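How long CloudFront keeps an object at the edge depends partly on the `Cache-Control` header returned by the origin, bounded by the minimum and maximum TTL settings of the cache policy. A minimal sketch of an origin-side policy, assuming fingerprinted static assets and an `/api/` path prefix (both hypothetical), might look like this:

```python
import re

# Hypothetical policy: fingerprinted static assets (e.g. app.3f9a1c.js) never
# change under the same name, so the edge may cache them for a year; HTML is
# cached briefly and API responses are not cached at all.
LONG_LIVED = re.compile(r"\.(css|js|png|jpg|svg|woff2)$")

def cache_control_for(path: str) -> str:
    """Return the Cache-Control header value the origin would send for `path`."""
    if path.startswith("/api/"):
        return "no-store"
    if LONG_LIVED.search(path):
        return "public, max-age=31536000, immutable"
    return "public, max-age=60"
```

The split reflects the point above: only objects that change rarely are worth copying close to users for a long time.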

How did you handle managing a growing number of application environments?

Because the customer’s teams knew Jenkins best and could automate it efficiently with its DSL, we introduced an additional Jenkins instance, which we called Management. Unlike a typical Jenkins, it did not build software; instead, among other things, it ran automated Ansible playbooks. In other words, we created a central point from which all environments were managed.

Jobs could also be set to run either periodically (for example, backups or security checks of environments) or on demand (for example, playbooks that update particular operating systems).

We chose Ansible as the main tool for automating the entire infrastructure at the operational level, and it brought several benefits. First, Ansible playbooks are more transparent than hand-written scripts. Second, it provides many ready-made modules that speed up software deployment. Third, it can orchestrate tasks across multiple machines. We used it to manage individual instances, handle deployments and configure cloud databases.
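Part of what makes Ansible modules safer to rerun than ad-hoc scripts is idempotency: a task declares the desired state and changes the system only when the current state differs, reporting whether anything changed. The function below is a hypothetical, simplified imitation of what the `lineinfile` module does for a single line; it is not Ansible code, and it works on a string rather than a file to stay self-contained.

```python
def ensure_line(content: str, line: str) -> tuple[str, bool]:
    """Ensure `line` is present in `content` (e.g. a config file's text).

    Returns (new_content, changed). Running it a second time on its own
    output makes no change, which is the idempotency Ansible tasks rely on."""
    lines = content.splitlines()
    if line in lines:
        return content, False  # already in the desired state: nothing to do
    lines.append(line)
    return "\n".join(lines) + "\n", True
```

For example, adding a hypothetical hardening line such as `PermitRootLogin no` reports `changed=True` the first time and `changed=False` on every later run.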

What about machine updates?

The machines were updated with Ansible, but it is important to distinguish two types of machines. The first type requires ongoing maintenance: for example, servers running Active Directory and Active Directory Federation Services. These machines need care because they store data and service configuration. The second type are the application clusters, which ran in Auto Scaling groups, meaning that the number of available resources adjusted dynamically to the load. To keep these machines secure, we used Packer together with Ansible to build so-called golden images, from which the application clusters were launched.
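The dynamic adjustment mentioned above can be approximated by the proportional rule behind target-tracking scaling policies: scale the group so that the average metric per instance moves toward the target. This is a simplified sketch; the real EC2 Auto Scaling behavior also involves cooldowns, instance warm-up and more conservative scale-in, and the example values are hypothetical.

```python
import math

def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Approximate target tracking: if the average metric (e.g. CPU %) is above
    the target, add instances in proportion; if below, remove them.
    The result is clamped to the Auto Scaling group's min/max size."""
    if current <= 0 or target <= 0:
        raise ValueError("current capacity and target must be positive")
    proposed = math.ceil(current * metric / target)
    return max(min_size, min(max_size, proposed))
```

For example, four instances averaging 90% CPU against a 60% target would be scaled to six. Because every new instance starts from the same golden image, scaling out never produces an unpatched machine.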

What practices did you use to design the infrastructure?

We designed the architecture based on industry best practices and AWS recommendations such as the AWS Well-Architected Framework. We also drew on my experience from previous AWS projects, including infrastructure implementations for two foreign brokerage houses.

How did you use the Infrastructure as Code approach?

All of the infrastructure was built this way: every resource is described in code, and dedicated tools (for example, Terraform) then deploy the infrastructure so described to the cloud. This gave us several benefits.

First, changes to the infrastructure became auditable. Every code change leaves a trace in the repository, so you can see who changed what, and how. Second, configuration drift can be detected. If someone makes a manual modification on the cloud side, it creates a difference from the code, which we can easily detect and eliminate.

Third, repeatability. Code written once can be reused for different environments. When building new solutions, you can rely on ready-made components, which significantly speeds up implementing infrastructure for applications.
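Terraform performs a comparison of this kind itself when it plans changes. The sketch below only illustrates the underlying idea of drift detection on plain dictionaries; the resource IDs and attributes are hypothetical and do not correspond to Terraform's state format.

```python
def detect_drift(desired: dict, actual: dict) -> dict:
    """Compare the state described in code (`desired`) with what exists in the
    cloud (`actual`). Both map resource IDs to attribute dicts.

    Returns {resource_id: {attribute: (desired_value, actual_value)}} for every
    difference; a resource present on only one side is reported under the
    pseudo-attribute "exists" as (in_code, in_cloud)."""
    drift = {}
    for rid in desired.keys() | actual.keys():
        want, have = desired.get(rid), actual.get(rid)
        if want is None or have is None:
            drift[rid] = {"exists": (want is not None, have is not None)}
            continue
        diffs = {
            attr: (want.get(attr), have.get(attr))
            for attr in want.keys() | have.keys()
            if want.get(attr) != have.get(attr)
        }
        if diffs:
            drift[rid] = diffs
    return drift
```

In the usage below, someone has manually opened port 22 on a security group and created a machine outside of code; both show up as drift, while the unchanged bucket does not.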

How did you implement log management?

For log processing, we chose a solution that is both secure and convenient for developers. For application data we implemented the so-called EFK stack (Elasticsearch, Fluent Bit and Kibana), while for cloud logs we used solutions based on Kinesis Data Firehose to parse log data efficiently. Together, these solutions collected logs and forwarded them to a central location, allowing application problems to be diagnosed quickly and conveniently.
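Before logs reach Elasticsearch, a collector such as Fluent Bit typically parses each raw line into a structured record; its regex parser turns named capture groups into record fields. The sketch below applies the same principle in Python to a hypothetical log format (`<timestamp> <LEVEL> <service> <message>`); it is not Fluent Bit configuration.

```python
import re

# Hypothetical application log format: "<timestamp> <LEVEL> <service> <message>".
# Each named group becomes a field that can be searched and filtered in Kibana.
LOG_PATTERN = re.compile(
    r"^(?P<time>\S+)\s+(?P<level>[A-Z]+)\s+(?P<service>[\w-]+)\s+(?P<message>.*)$"
)

def parse_line(line: str) -> dict:
    """Turn one raw log line into a structured record."""
    match = LOG_PATTERN.match(line)
    if match is None:
        # Keep lines that do not match instead of dropping them silently.
        return {"message": line, "parse_error": True}
    return match.groupdict()
```

Keeping unparseable lines (flagged with `parse_error`) means a format change in one application does not make its logs disappear from the central store.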

How did you organize user account management?

Building on Active Directory and Active Directory Federation Services, we implemented a solution that enabled sign-in to all AWS accounts as well as to the business applications available to the customer’s employees. This brought many benefits, because the entire user lifecycle in the organization could be managed in one central place. We eliminated the need to log in to multiple services separately and replaced it with a smooth single sign-on (SSO) solution.

With careful configuration and role definitions, we could assign permissions per application. We modeled role-based access control (RBAC) at the level of Active Directory groups, which mapped to actual roles, whether in AWS or in individual applications.
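One common way to map Active Directory groups to AWS roles through AD FS is a group naming convention that a claim rule turns into IAM role ARNs. The sketch below assumes such a convention, `AWS-<account ID>-<role name>`, which is hypothetical here since the interview does not say which convention was used; only the IAM role ARN format is standard.

```python
import re

# Hypothetical convention: membership in "AWS-<12-digit account id>-<role name>"
# grants the matching IAM role. Other groups (e.g. "Domain Users") are ignored.
GROUP_PATTERN = re.compile(r"^AWS-(?P<account>\d{12})-(?P<role>[\w+=,.@-]+)$")

def roles_for_groups(groups: list[str]) -> list[str]:
    """Translate a user's AD group memberships into IAM role ARNs."""
    arns = []
    for group in groups:
        match = GROUP_PATTERN.match(group)
        if match:
            arns.append(f"arn:aws:iam::{match['account']}:role/{match['role']}")
    return sorted(arns)
```

With a rule like this, granting or revoking access is a group membership change in Active Directory; nothing has to be edited in the individual AWS accounts.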

What about implementing MFA?

For security reasons, multi-factor authentication (MFA) had to be implemented. Our analysis of the available products showed that many vendors charge USD 10 per user per month for their MFA solution. For a hundred users, that adds up to USD 12,000 a year, a significant cost for the customer. To avoid it, we wrote an add-on for Active Directory Federation Services that implemented MFA using the TOTP (Time-based One-Time Password) algorithm, which is supported by the popular Google Authenticator. In short, we not only configured the infrastructure but, to reduce licensing costs, also built the missing components of that infrastructure ourselves.
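TOTP itself is a small, well-specified algorithm (RFC 6238, built on HOTP from RFC 4226), which is part of why building an add-on was feasible. The sketch below shows only code generation and verification in Python; it is not the AD FS add-on itself (AD FS authentication providers are .NET components), and secret provisioning, replay protection and rate limiting are deliberately left out.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional

def totp(secret_b32: str, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP with HMAC-SHA1, the default used by Google Authenticator."""
    # Authenticator apps show secrets as unpadded Base32; restore the padding.
    key = base64.b32decode(secret_b32.upper() + "=" * (-len(secret_b32) % 8))
    t = time.time() if for_time is None else for_time
    counter = int(t // step)  # number of time steps since the Unix epoch
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation (RFC 4226, section 5.3)
    binary = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(binary % 10 ** digits).zfill(digits)

def verify(secret_b32: str, code: str, now: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept the code for the current time step or up to `window` steps
    either side, to tolerate small clock differences between devices."""
    t = time.time() if now is None else now
    return any(
        hmac.compare_digest(totp(secret_b32, t + i * step, step), code)
        for i in range(-window, window + 1)
    )
```

The secret used in the checks is the Base32 encoding of the RFC 6238 test key `12345678901234567890`, so the 8-digit results match the RFC's published test vectors. A production verifier should also remember the last accepted time step per user so that a code cannot be reused within its validity window.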

How did you configure application security monitoring?

It’s worth noting that our client had high security requirements. We introduced a whole set of products for monitoring, risk detection and prevention. Among others, we implemented Amazon GuardDuty, a service that automatically detects anomalies in networks and service configuration; Amazon Inspector, a tool that detects vulnerabilities in the configuration of instances; and anti-malware tools from external vendors. To verify that the infrastructure was configured correctly, we used AWS Config. It lets us define security rules and, independently of the rest of the platform, checks resources for compliance with them.
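A rule evaluated by AWS Config can be pictured as a function that takes a resource's configuration and returns a compliance verdict (`COMPLIANT` or `NON_COMPLIANT`). The sketch below assumes a simplified, hypothetical security-group shape rather than the exact configuration item AWS Config delivers, and checks that SSH and RDP are not open to the whole internet. AWS Config also offers managed rules for similar checks (for example `restricted-ssh`), so custom logic like this is only needed where those do not cover a requirement.

```python
# Ports that should never be reachable from the whole internet (SSH and RDP).
RESTRICTED_PORTS = {22, 3389}

def evaluate_security_group(group: dict) -> str:
    """Return an AWS Config-style verdict for a simplified security group:
    {"ingress": [{"from_port": int, "to_port": int, "cidrs": [str, ...]}, ...]}"""
    for rule in group.get("ingress", []):
        open_to_world = "0.0.0.0/0" in rule.get("cidrs", [])
        exposes_admin_port = any(
            rule["from_port"] <= port <= rule["to_port"] for port in RESTRICTED_PORTS
        )
        if open_to_world and exposes_admin_port:
            return "NON_COMPLIANT"
    return "COMPLIANT"
```

A port range such as 0 to 65535 open to `0.0.0.0/0` is flagged too, because it includes the restricted ports.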

What about databases?

We implemented relational databases on the managed Amazon RDS service. It makes encryption at rest and in transit easy to enable, supports passwordless authentication and provides automatic snapshot backups.

How did you prepare the infrastructure to defend against the most common attacks on web applications?

To meet the security requirements and protect against the most common attacks on web applications, we implemented a web application firewall (WAF), which, combined with the other services in the infrastructure, provided a solid security layer.
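Protection against injection-style attacks in a WAF usually comes from maintained rule sets; a simpler building block that is easy to illustrate is rate-based blocking, where a client IP is blocked once it sends too many requests within a time window (AWS WAF offers this as rate-based rules, configured declaratively rather than coded). The sketch below shows only the counting idea with a per-IP sliding window; the limit and window values are hypothetical.

```python
from collections import defaultdict, deque

def blocked_ips(requests, limit: int, window_seconds: float) -> set:
    """requests: iterable of (timestamp_seconds, client_ip), in time order per IP.
    Returns the IPs that sent more than `limit` requests within any sliding
    window of `window_seconds` (window_seconds must be positive)."""
    recent = defaultdict(deque)  # ip -> timestamps still inside the window
    blocked = set()
    for ts, ip in requests:
        window = recent[ip]
        window.append(ts)
        # Drop requests that are older than the window relative to this one.
        while window[0] <= ts - window_seconds:
            window.popleft()
        if len(window) > limit:
            blocked.add(ip)
    return blocked
```

A burst of requests from one address trips the limit, while the same number of requests spread out over time does not.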

How did you connect offices in different regions to the cloud?

We opted for advanced networking to meet the need to connect offices in different regions and give them access to resources in the cloud. After analyzing the requirements, we implemented a transit gateway: a hub that connects networks in AWS and also lets us attach site-to-site VPN tunnels.
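A transit gateway acts as a router between its attachments (VPCs and VPN connections) and, like other IP routers, forwards traffic using the most specific matching route. The sketch below illustrates that longest-prefix-match lookup; the CIDR ranges and attachment names are hypothetical.

```python
import ipaddress
from typing import Optional

# Hypothetical transit gateway route table: destination CIDR -> attachment.
ROUTES = {
    "10.0.0.0/8": "vpn-head-office",
    "10.20.0.0/16": "vpc-production",
    "10.30.0.0/16": "vpc-shared-services",
    "192.168.10.0/24": "vpn-regional-office",
}

def next_hop(destination: str, routes: dict = ROUTES) -> Optional[str]:
    """Return the attachment for the most specific route containing `destination`,
    or None if no route matches (in this sketch, such traffic is dropped)."""
    addr = ipaddress.ip_address(destination)
    best = None  # (network, attachment) with the longest prefix so far
    for cidr, attachment in routes.items():
        net = ipaddress.ip_network(cidr)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, attachment)
    return None if best is None else best[1]
```

Here `10.20.5.9` goes to the production VPC even though the broader `10.0.0.0/8` route towards the head office also matches, which is what lets a hub carry both office-wide and VPC-specific routes.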

How would you summarize the benefits our pragmatic approach brought the customer?

A very high level of system security. Less dependence on external vendors, achieved by building our own components wherever there was a business case for it. Centralized identity management and lower costs. A high level of automation: at one point, the entire infrastructure was in fact handled by a single person.

The customer was convinced of our competence, trusted us and made extensive use of the capabilities of the AWS cloud.