What is Data Tokenization and How Does It Differ from Encryption?

Data security is more important than ever. With increasing cyber threats and strict regulations, protecting sensitive information is a top priority for businesses. This is where data tokenization comes into play. But what exactly is data tokenization, and how does it differ from other security measures like encryption? Let’s dive in and find out.

What is Data Tokenization?

Definition and Basic Concept

Data tokenization is the process of protecting sensitive data by replacing it with unique substitute values, known as tokens. These tokens have no meaningful value on their own and cannot be reverse-engineered to reveal the original confidential data. Essentially, tokenization swaps valuable data for a string of characters that is useless to anyone without authorized access to the system that maps tokens back to the original values.

Importance in the Current Digital Landscape

Data breaches and cyberattacks are now common threats. Personal information, financial details, and other confidential data are prime targets for attackers. Tokenization helps mitigate these risks: even if tokenized data is stolen or intercepted, it remains unusable to unauthorized parties.

Tokenization is crucial not just for security but also for regulatory compliance. Standards and regulations such as the Payment Card Industry Data Security Standard (PCI DSS) require businesses to protect customer data. Data tokenization solutions help meet these requirements, and they can also reduce the number of systems that fall within compliance scope, because systems that handle only tokens never see the real data.

How Data Tokenization Works

Tokenization Process Overview

Data tokenization is a straightforward yet powerful process. Here’s a simple breakdown of how it works:

- Data Identification: Identify the confidential data that needs protection, such as credit card numbers or personal information.

- Token Generation: Replace the confidential data with a token. This token is a random string of characters with no inherent value.

- Token Mapping: Store the relationship between the source data and its token in a secure database, often called a token vault.

- Data Usage: Use the token in place of the actual data throughout your systems. When the original data is needed, an authorized system looks up the token in the token vault to retrieve it (a step often called detokenization).
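The four steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming an in-memory dictionary stands in for the token vault; `TokenVault`, `tokenize`, and `detokenize` are hypothetical names, and a real vault would be a hardened, encrypted, access-controlled data store rather than a Python object.

```python
import secrets


class TokenVault:
    """Minimal in-memory token vault (illustrative only)."""

    def __init__(self) -> None:
        # Token mapping: token -> original value. A real vault would be an
        # encrypted, access-controlled database, not a Python dict.
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Token generation: random output from a cryptographically secure
        # generator, so the token has no mathematical link to the value.
        token = secrets.token_urlsafe(16)
        while token in self._token_to_value:  # retry on the (very unlikely) collision
            token = secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Only code that can reach the vault can map a token back.
        return self._token_to_value[token]


# Data identification: the card number is the sensitive field.
vault = TokenVault()
card_number = "4111111111111111"  # widely used test card number, not a real account
token = vault.tokenize(card_number)

# Data usage: downstream records carry only the token.
order = {"order_id": 1001, "card": token}
print(order["card"] == card_number)     # False
print(vault.detokenize(order["card"]))  # 4111111111111111
```

Because `tokenize` draws a fresh random value on every call, tokenizing the same card twice in this sketch yields two different tokens, which is the non-deterministic behavior described under Types of Tokens.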

About Token Generation and Mapping Systems

Token generation is at the heart of data tokenization. Tokens are typically produced by a cryptographically secure random number generator, with a check that each new token is unique. Because a random token has no mathematical relationship to the value it replaces, there are no patterns or clues that can link it back to the original data without access to the token vault.

The mapping system, or token vault, is a secure database that records which token corresponds to which piece of sensitive data. Access to the token vault is strictly controlled, so only authorized systems can retrieve the original data when necessary. This separation means that even if tokens are intercepted, they are useless without the token vault.

How Tokenization Improves Data Security

One of the most common uses of data tokenization is in payment processing. When you make a purchase online, your credit card number is often tokenized: the token is used to process the transaction, while the actual card number stays in a secure token vault. This way, even if the transaction data is intercepted, your card information remains protected.

In data storage, businesses often tokenize confidential information such as Social Security numbers or home addresses. The tokenized data can be stored and processed with far less risk, because the actual information is locked away in the token vault. When needed, an authorized system can use the vault to retrieve the original data.

Types of Tokens

Tokens come in two main types: deterministic and non-deterministic.

Deterministic Tokens: Deterministic tokenization always produces the same token for a given piece of data. This is useful when you need consistency, such as in databases where you need to match or search on tokenized values.

- Deterministic tokens are often used in scenarios where data needs to be consistently referenced. For instance, in customer loyalty programs, deterministic tokens allow the system to recognize repeat customers without storing their actual sensitive information.
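One common way to produce deterministic tokens is a keyed hash such as HMAC-SHA256: the same input and key always give the same output, but without the secret key an attacker cannot compute the token for a guessed value. The sketch below assumes that approach (commercial products may work differently); `TOKEN_KEY` and `deterministic_token` are hypothetical names. An HMAC is one-way, so if the original value must be recoverable, the token-to-value mapping still has to live in a vault.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret key held only by the tokenization service. Without it,
# an attacker cannot recompute the token for a guessed value.
TOKEN_KEY = secrets.token_bytes(32)


def deterministic_token(value: str, key: bytes = TOKEN_KEY) -> str:
    """Same value + same key -> same token (keyed HMAC-SHA256, hex-encoded)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Loyalty-program style counting: visits are keyed by token, never by the raw value.
visits: dict[str, int] = {}
for phone in ["+1-555-0100", "+1-555-0199", "+1-555-0100"]:
    token = deterministic_token(phone)
    visits[token] = visits.get(token, 0) + 1

print(len(visits))                                 # 2 distinct customers
print(visits[deterministic_token("+1-555-0100")])  # 2 visits: repeat customer recognized
```

The program recognizes the returning customer by comparing tokens alone; the raw phone numbers never need to be stored next to the visit counts.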

Non-Deterministic Tokens: Non-deterministic tokenization produces a different token each time, even for the same piece of data. This adds a layer of security: because identical values receive unrelated tokens, an attacker cannot spot repeated values or correlate records by comparing tokens, which makes it even harder to guess or reverse-engineer the source data.

- Non-deterministic tokens are ideal for situations requiring the highest level of security. For example, in high-value transactions or where sensitive personal information is involved, non-deterministic tokens ensure that even if tokens are intercepted, they cannot be linked back to the source data.
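By contrast, a non-deterministic scheme draws a fresh random token on every call and records each mapping in the vault. In this minimal sketch a plain dictionary stands in for the vault and `tokenize` is a hypothetical helper; the point is that two tokens for the same value look unrelated, and only the vault connects them.

```python
import secrets


def tokenize(value: str, vault: dict[str, str]) -> str:
    """Non-deterministic: a fresh random token on every call, recorded in the vault."""
    token = secrets.token_hex(16)  # 32 hex characters from a CSPRNG
    vault[token] = value
    return token


vault: dict[str, str] = {}  # stand-in for a real token vault
ssn = "000-00-0000"  # placeholder, not a real Social Security number

t1 = tokenize(ssn, vault)
t2 = tokenize(ssn, vault)

print(t1 == t2)                # False (a repeat is astronomically unlikely)
print(vault[t1] == vault[t2])  # True: only the vault links the two tokens
```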

Tokenization Use Cases

Here are some key areas where tokenization proves invaluable:

Finance and Payment Processing

In the finance sector, tokenization is widely used. Visa Token Service (VTS) is a prime example: Visa replaces your actual credit card number with a unique digital token for online transactions, so merchants never see your real card details. The token is used to complete the transaction, while your actual card number is securely stored in a token vault, so even if transaction data is intercepted, your confidential information remains safe. By minimizing the exposure of real card details, tokenization significantly reduces the risk of fraud and data breaches.

Healthcare

Healthcare providers handle vast amounts of sensitive patient data, and protecting this information is critical to maintaining patient trust and meeting regulatory requirements like HIPAA. Tokenization helps healthcare organizations secure patient records by replacing personal information with tokens, so patient data can be shared and processed without exposing the actual details. HyTrust Healthcare Data Platform, for example, uses tokenization to pseudonymize patient data while enabling secure access for authorized users. This helps healthcare providers comply with HIPAA while facilitating efficient data analysis for research and care improvement.

Social Media and Digital Identity

Social media platforms and digital identity services use tokenization to protect user data. Sign in with Apple, for example, allows users to sign in to apps and websites without sharing their email address with the app developer; Apple generates a unique token for each app in its place, protecting user privacy. More generally, personal information such as email addresses or phone numbers is tokenized to prevent unauthorized access, which helps keep user data secure even if a platform’s database is compromised. Tokenization can also make it easier for users to move between platforms, improving the user experience while maintaining security.

Supply Chain Management

In supply chain management, tokenization helps track and verify product authenticity. Everledger uses blockchain technology and tokenization to track the origin and movement of diamonds. Each diamond receives a unique, tamper-proof token that records its provenance, ensuring authenticity and ethical sourcing for consumers. By tokenizing product information, businesses can ensure that data about the origin, movement, and handling of goods remains secure. This not only protects against fraud but also enhances the integrity and transparency of the supply chain.

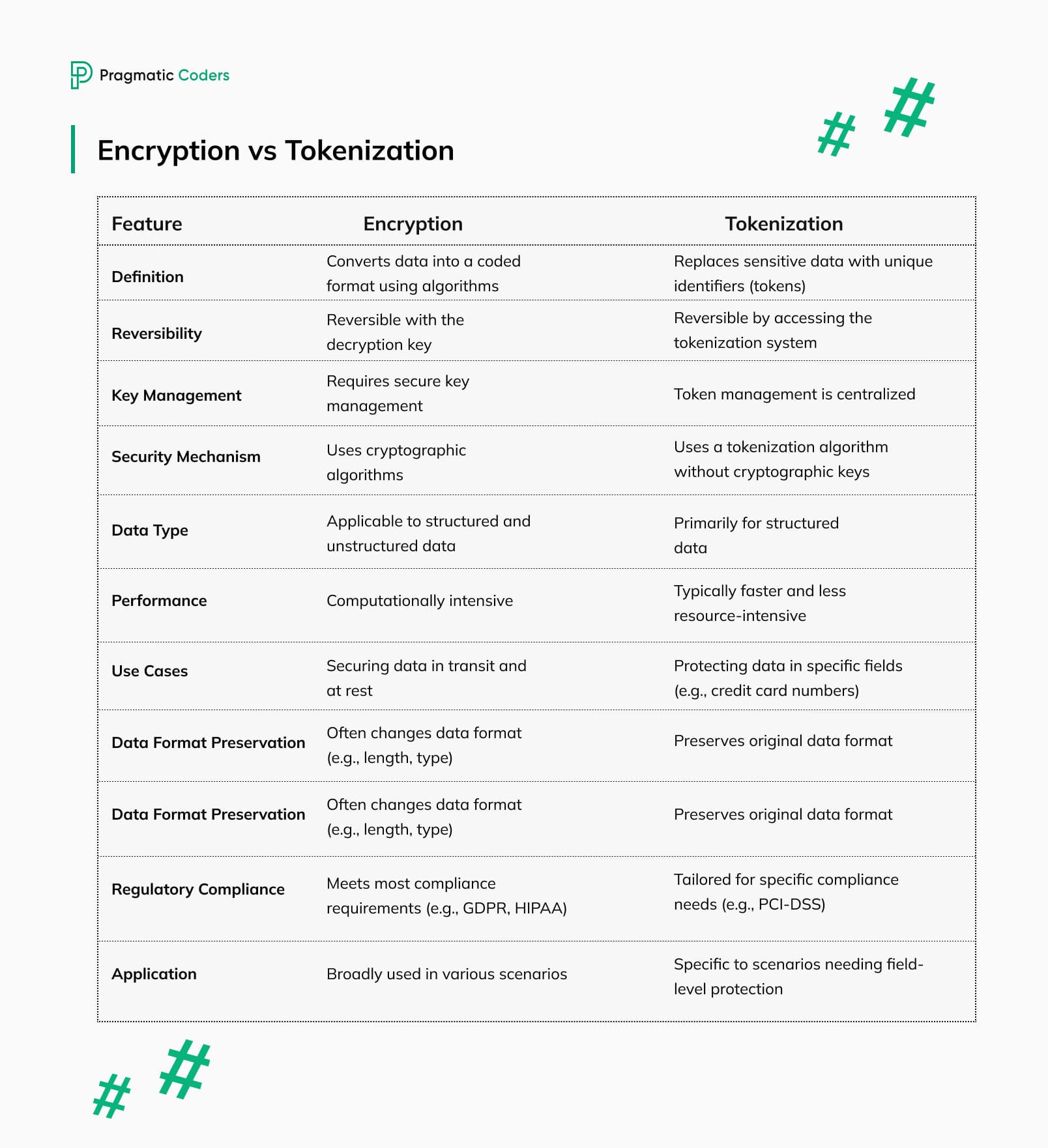

Tokenization vs Encryption

While both tokenization and encryption are used to protect sensitive data, they operate in distinct ways and serve different purposes.

Tokenization replaces sensitive data with non-sensitive tokens. These tokens have no meaning on their own and cannot be used to reverse-engineer the original data without access to a secure token vault. Tokenization is often used in scenarios where data needs to be stored or processed securely but doesn’t require frequent access in its original form. For example, tokenization is commonly used in payment processing to protect credit card information.

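Encryption, on the other hand, uses an algorithm and a key to convert data into a coded format (ciphertext) that can be deciphered only with the corresponding key. Unlike a token, ciphertext is mathematically derived from the original data, so anyone who holds the key can reverse it. Encryption is ideal for protecting data that needs to be accessed and used regularly, such as emails, documents, and files that require secure transmission over the internet.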

When to Use Data Encryption vs. Tokenization

Use Tokenization When:

- You need to store sensitive data securely, such as in databases or payment systems.

- Regulatory adherence requires you to protect certain types of data, like credit card information under PCI DSS.

- You want to minimize the risk of information breaches by ensuring that intercepted data is meaningless without access to the token vault.

Use Encryption When:

- You need to securely transmit data over the internet, like emails or online transactions.

- You need to protect data at rest on devices like laptops or mobile phones.

- You need to access and use data in its original form while keeping it secure from unauthorized access.

Benefits of Tokenization and Encryption

Tokenization Benefits:

- Simplified Compliance: Tokenization helps meet regulatory requirements by ensuring that most systems never store or transmit sensitive data in its original form; only the token vault holds the real values.

- Reduced Risk: If a breach occurs, the stolen tokens are useless without the token vault.

- Efficiency: Tokenized data can be processed and analyzed without exposing the underlying sensitive values.

Encryption Benefits:

- Data Security: Encryption provides a strong layer of protection for data both in transit and at rest.

- Access Control: Only those with the decryption key can access the original dataset, ensuring tight control over who can view or use the data.

- Versatility: Encryption can be applied to a wide range of data types and usage scenarios.

About Post-Compromise Data Protection

One of the significant advantages of tokenization is its ability to limit data exposure even after a breach.

When a system using tokenization is compromised, the attackers gain access only to the tokens, not the actual sensitive data, provided the token vault itself remains secure. Since tokens are meaningless without the token vault, the impact of the breach is significantly reduced. This is particularly important in industries like finance and healthcare, where the consequences of data breaches can be severe.

In contrast, if encrypted data is compromised, its security depends on the strength of the encryption and the protection of the encryption keys. If attackers manage to obtain the decryption keys, they can recover the original data. Therefore, while encryption is powerful, it also requires rigorous key management practices to remain effective.

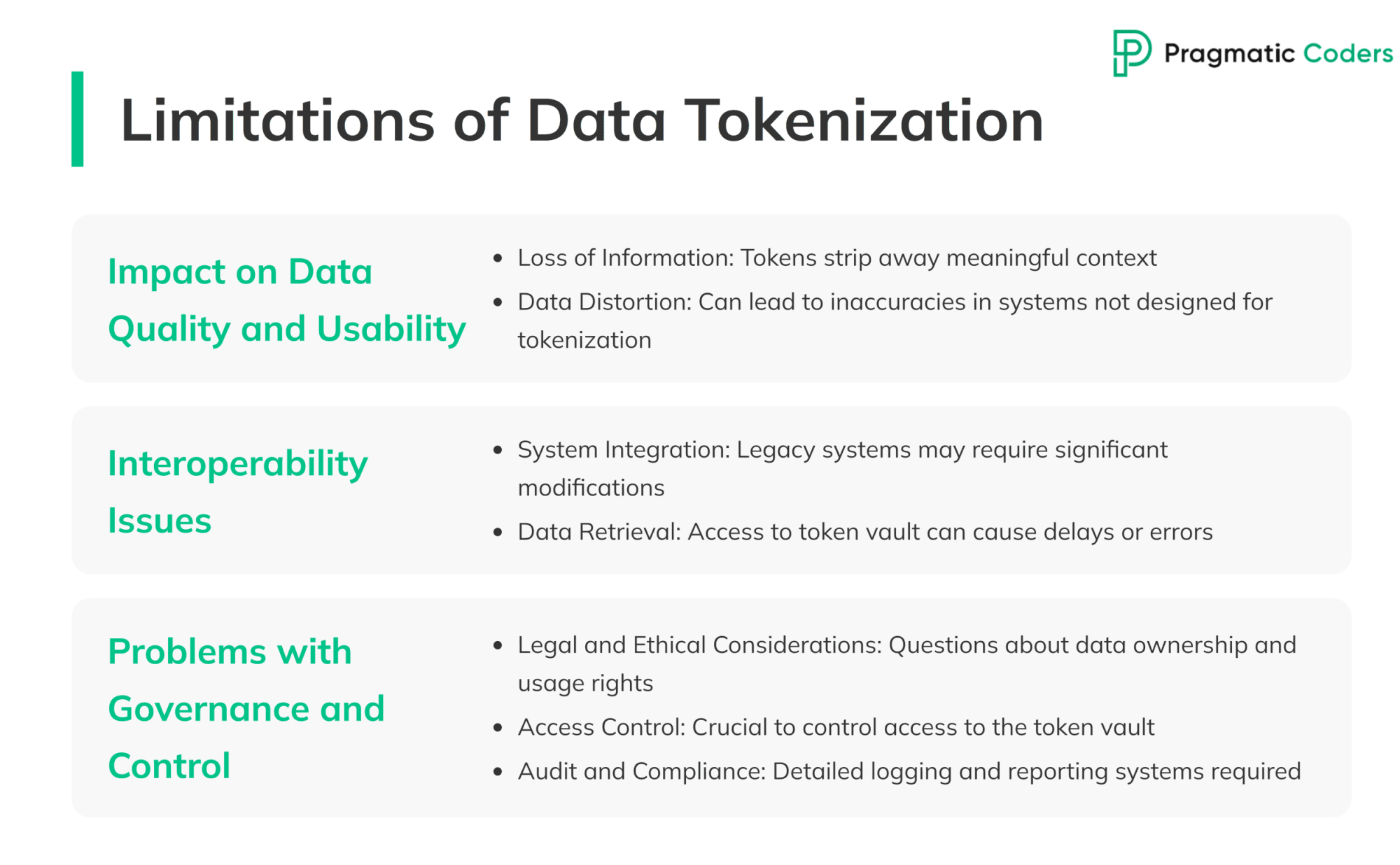

Limitations of Data Tokenization

Impact on Data Quality and Usability

Tokenization systems are great for security, but they can sometimes affect data quality and usability. When sensitive data is replaced with tokens, it loses its original format and context. This can lead to potential issues:

- Loss of Information: While tokens protect the data, they strip away meaningful context. For instance, a tokenized credit card number doesn’t reveal any information about the card issuer or the account holder. This can make it challenging for businesses that rely on such information for analytics or reporting.

- Data Distortion: Using tokens instead of raw data can cause errors in systems that weren’t designed to work with tokenized data. Applications that validate strict data formats or perform calculations on the original values may reject tokens or produce incorrect results.
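To illustrate the data-distortion problem, the sketch below assumes a hypothetical legacy rule that a card-number field must contain exactly 16 digits. A random URL-safe token (here from Python’s `secrets.token_urlsafe`, which yields 22 characters for 16 random bytes) fails that check even though the real card number passes, so a system like this would reject or mishandle tokenized records. `CARD_PATTERN` and `legacy_accepts` are hypothetical names.

```python
import re
import secrets

# Hypothetical legacy validation rule: a card-number field must hold
# exactly 16 digits. Code like this often predates tokenization.
CARD_PATTERN = re.compile(r"\d{16}")


def legacy_accepts(card_field: str) -> bool:
    """Return True only if the whole field is exactly 16 digits."""
    return CARD_PATTERN.fullmatch(card_field) is not None


original = "4111111111111111"       # widely used test card number
token = secrets.token_urlsafe(16)   # 22 chars: letters, digits, '-' and '_'

print(legacy_accepts(original))  # True
print(legacy_accepts(token))     # False: the token breaks the expected format
```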

Interoperability Issues

Integrating tokenization into existing systems can be complex. Here are some common challenges:

- System Integration: Many legacy systems weren’t built with tokenization in mind. Integrating tokenization into these systems often requires significant modifications, which can be costly and time-consuming.

- Data Retrieval: Retrieving the original data from tokens requires access to the token vault. If systems aren’t properly configured to handle this process, it can lead to delays or errors in data retrieval, impacting business operations.

Problems with Governance and Control

Managing tokenized data involves several governance and control challenges:

- Legal and Ethical Considerations: Tokenization involves replacing original data with tokens, which raises questions about data ownership and usage rights. Businesses must ensure they comply with legal and ethical standards when handling tokenized data.

- Access Control: Controlling access to the token vault is crucial. Only authorized personnel should have the ability to map tokens back to their original data. This requires robust access control mechanisms and constant monitoring to prevent unauthorized access.

- Audit and Compliance: Keeping track of who accesses the token vault and how tokens are used is essential for audit and compliance purposes. Businesses must implement detailed logging and reporting systems to ensure they meet regulatory requirements and can demonstrate compliance during audits.
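The access-control and audit requirements above can be illustrated with a small wrapper around a vault. This is a sketch under simplifying assumptions: callers are identified by a plain string, the allow-list is a hard-coded set, and audit records go to Python’s standard `logging` module. `AuditedVault`, `billing-service`, and `analytics-job` are hypothetical; a production system would authenticate callers and send audit logs to tamper-resistant storage.

```python
import logging
import secrets

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
audit_log = logging.getLogger("vault.audit")


class AuditedVault:
    """Illustrative vault that enforces an allow-list and audits detokenization."""

    def __init__(self, authorized_callers: set[str]) -> None:
        self._authorized = authorized_callers
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = secrets.token_urlsafe(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, caller: str) -> str:
        allowed = caller in self._authorized
        # Record who asked, for which token, and whether it was allowed,
        # before returning anything. The sensitive value itself is never logged.
        audit_log.info("detokenize caller=%s token=%s allowed=%s", caller, token, allowed)
        if not allowed:
            raise PermissionError(f"{caller} may not detokenize")
        return self._store[token]


vault = AuditedVault(authorized_callers={"billing-service"})
token = vault.tokenize("4111111111111111")
print(vault.detokenize(token, caller="billing-service"))  # 4111111111111111
try:
    vault.detokenize(token, caller="analytics-job")
except PermissionError as exc:
    print("denied:", exc)  # denied: analytics-job may not detokenize
```

Both the allowed and the denied request leave an audit record, which is what lets a business demonstrate during an audit who accessed the vault and when.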

Conclusion

Data tokenization enhances security and compliance by replacing confidential information with meaningless tokens. It limits the damage of breaches, simplifies compliance, and lets businesses work with data without exposing the underlying values. Implementing tokenization can present challenges such as data distortion and integration issues, but it remains an effective way to reduce fraud and secure sensitive data, which makes it a valuable part of modern data protection.